(see (3) in A Page in my Notebook [2])

That is,

Exercise-1 Show that

Exercise-2 Generating

It follows from An Analytic Proof of the Extraordinary Euler Sum that

Similarly,

It is also true that

We demonstrate (3) through the following steps:

Exercise-1 Show that

(If you are considering rewriting as

, don’t! Use (1) and (2) instead)

Exercise-2 Is ?

Introducing Feynman’s Integral Method

Michael Xue

Indiana Section MAA Spring 2024 MEETING

Marian University – Indianapolis

April 5-6, 2024

“The first principle is that you must not fool yourself and you are the easiest person to fool.”

— Richard Feynman

This is one of my favorite quotes because it speaks to the importance of humility when approaching mathematics and science. To me, it means not being constrained by my existing knowledge or beliefs, nor by previous learning or observations of others’ methods. It’s about freeing my mind to view things from an entirely new perspective.

Feynman’s Integral Method

Leibniz: “$\dfrac{d}{dt}\displaystyle\int_a^b f(x,t)\,dx=\int_a^b \dfrac{\partial}{\partial t}f(x,t)\,dx$”

Feynman: “To evaluate hard integrals, differentiate under the integral sign!”

Feynman’s integral method is a powerful technique for simplifying complex integrals, often by transforming them into more manageable forms. Popularized by Richard Feynman, the method put Leibniz’s theorem to practical use and showed how effectively it solves intricate mathematical problems.
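This is a minimal numerical sketch of differentiating under the integral sign. It is not one of the talk's examples, whose integrals are not reproduced here. It uses the textbook integral $I(t)=\int_0^1 \frac{x^t-1}{\ln x}\,dx$. Differentiating under the integral sign gives $I'(t)=\int_0^1 x^t\,dx=\frac{1}{t+1}$. Since $I(0)=0$, it follows that $I(t)=\ln(t+1)$. The sketch below compares a finite-difference derivative of $I$ with the integral of the partial derivative. The helper names `midpoint`, `I`, and `dI_under_sign` are hypothetical.

```python
import math


def midpoint(f, a, b, n=20000):
    """Composite midpoint rule; never evaluates f at the endpoints a and b."""
    h = (b - a) / n
    return h * sum(f(a + (i + 0.5) * h) for i in range(n))


def I(t):
    # I(t) = ∫_0^1 (x^t - 1) / ln(x) dx. The integrand is undefined at x = 0
    # and x = 1 (its limits there are 0 and t); the midpoint rule avoids both.
    return midpoint(lambda x: (x**t - 1.0) / math.log(x), 0.0, 1.0)


def dI_under_sign(t):
    # Leibniz's rule: ∂/∂t [(x^t - 1) / ln(x)] = x^t, so I'(t) = ∫_0^1 x^t dx.
    return midpoint(lambda x: x**t, 0.0, 1.0)


t, eps = 2.0, 1e-4
finite_diff = (I(t + eps) - I(t - eps)) / (2 * eps)  # central difference of I
print(finite_diff, dI_under_sign(t), 1 / (t + 1))     # all three should agree
print(I(t), math.log(t + 1))                          # I(t) = ln(t + 1)
```

The midpoint rule is used here only because it never touches the endpoints, where the integrand has to be defined by a limit.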

Two algorithms implementing Feynman’s Integral method

Algorithm #1 Reducing

Algorithm #2 Generating from a known definite integral
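One way to read Algorithm #2 is sketched here; it is an assumption, not the talk's exact procedure. Start from a known definite integral that contains a parameter, then differentiate it repeatedly with respect to that parameter. From $\int_0^\infty e^{-ax}\,dx=\frac1a$ ($a>0$), differentiating $n$ times with respect to $a$ generates $\int_0^\infty x^n e^{-ax}\,dx=\frac{n!}{a^{n+1}}$. The sketch below checks a few of these generated formulas numerically. The helper names `simpson` and `generated_closed_form` are hypothetical.

```python
import math


def simpson(f, a, b, n=6000):
    """Composite Simpson's rule on [a, b] with n (even) subintervals."""
    if n % 2:
        raise ValueError("n must be even")
    h = (b - a) / n
    s = f(a) + f(b)
    s += 4 * sum(f(a + i * h) for i in range(1, n, 2))
    s += 2 * sum(f(a + i * h) for i in range(2, n, 2))
    return s * h / 3


def generated_closed_form(n, a):
    # d^n/da^n (1/a) = (-1)^n n! / a^(n+1) and d^n/da^n e^{-ax} = (-1)^n x^n e^{-ax};
    # the signs cancel, giving ∫_0^∞ x^n e^{-ax} dx = n! / a^(n+1).
    return math.factorial(n) / a ** (n + 1)


a = 2.0
for n in range(5):
    # Truncating at x = 60 is harmless for a = 2: the tail is negligible.
    numeric = simpson(lambda x, n=n: x**n * math.exp(-a * x), 0.0, 60.0)
    print(n, numeric, generated_closed_form(n, a))
```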

Like many other mathematicians and physicists, I’ve extensively experimented with Feynman’s method in solving numerous problems. Through a gradual, inductive approach, I’ve discerned patterns that allowed me to synthesize these into two efficient algorithms. Today’s talk will focus on these algorithms, demonstrating their effectiveness in implementing Feynman’s method. I’ll provide multiple examples to illustrate how these algorithms can be used to solve a wide range of mathematical problems.

Example-1

Evaluate

Example-2

Evaluate

Example-3

Evaluate

For

Traditional methods often run into indeterminate forms such as $0\cdot\infty$. Feynman’s method sidesteps this issue without invoking L’Hôpital’s rule.

Example-4

Given find

While preparing for this talk last weekend, I developed the following example. Here is an abbreviated version of its proof that highlights the key steps.

Example-5

Prove

Since

to prove , it suffices to demonstrate that

For the full-length proof, read my post “An Analytic Proof of the Extraordinary Euler Sum” on

https://nuomegamath.wordpress.com/2024/04/02

Many of you may know Dr. Zeilberger, “a champion of using computers and algorithms to perform mathematics quickly and efficiently.” (Wikipedia) You can find more about his work on his website. After completing the proof a few days ago, I reached out to him for a review. He graciously agreed to do so at short notice. Dr. Zeilberger is known for his straightforward style, always telling it like it is.

Dr. Zeilberger, Professor at Rutgers University.

https://sites.math.rutgers.edu/~zeilberg/

I would like to revisit Example-3 and solve it with an approach different from Feynman’s. This aligns with Feynman’s first principle of not fooling oneself and underscores the value of viewing problems from entirely new perspectives.

Question: WWFD?

Answer: I see and

Since for

I have

so

In Deriving the Extraordinary Euler Sum, we derived one of Euler’s most celebrated results:

Now, we aim to provide a rigorous proof of this statement.

First, we express the partial sum of the left-hand side as follows:

This splits the partial sum into two parts: one involving the squares of even numbers and the other involving the squares of odd numbers. Simplifying the even part gives us:

Rearranging terms on one side, we obtain:

Since converges to

(see My Shot at Harmonic Series)

converges to

to prove (1), it suffices to demonstrate that

or equivalently,

Let and

we have

and

Differentiating with respect to

That is,

Integrating with respect to from

to

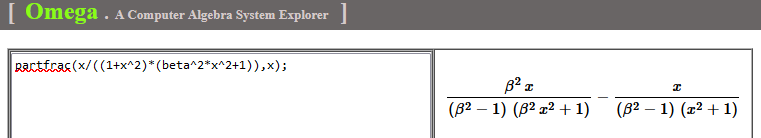

and expressing the integrand in partial fractions:

yields

i.e.,

By (3) and (4),

or

which is (2)
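The even/odd split described above can be checked numerically. Write $S_n=\sum_{k=1}^n \frac1{k^2}$ and $T_n=\sum_{k=1}^n \frac1{(2k-1)^2}$. The even terms of $S_{2n}$ simplify to $\frac14 S_n$, so $S_{2n}=\frac14 S_n+T_n$. Assuming (1) is the Basel sum $\sum_{k\ge1}\frac1{k^2}=\frac{\pi^2}{6}$, this forces $T_n\to\frac34\cdot\frac{\pi^2}{6}=\frac{\pi^2}{8}$. The sketch below only illustrates these relations, and its function names are hypothetical. Partial sums are evidence, not a proof.

```python
import math


def basel_partial(n):
    # S_n = sum_{k=1}^{n} 1/k^2
    return sum(1.0 / k**2 for k in range(1, n + 1))


def odd_partial(n):
    # T_n = sum_{k=1}^{n} 1/(2k-1)^2, the odd-square part of S_{2n}
    return sum(1.0 / (2 * k - 1) ** 2 for k in range(1, n + 1))


n = 100_000
lhs = basel_partial(2 * n)
rhs = basel_partial(n) / 4 + odd_partial(n)  # even part: sum 1/(2k)^2 = S_n / 4
print(abs(lhs - rhs))                    # the split identity, up to rounding error
print(odd_partial(n), math.pi**2 / 8)    # T_n approaches pi^2/8 (tail about 1/(4n))
print(basel_partial(n), math.pi**2 / 6)  # S_n approaches pi^2/6 (tail about 1/n)
```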

Prove

Since

means

we have

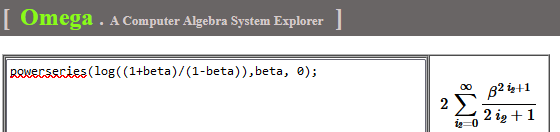

Expand into its Maclaurin series:

Prove

For

We have

As a result,

i.e.,

Moreover,

Let and

gives

It follows that

For

Let

I see and

Since for

I have

so

.

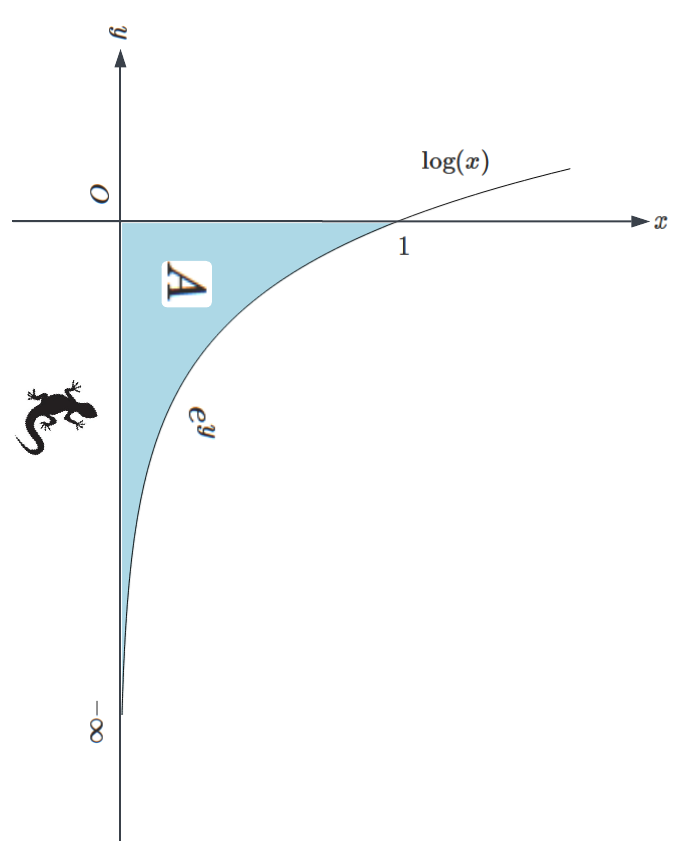

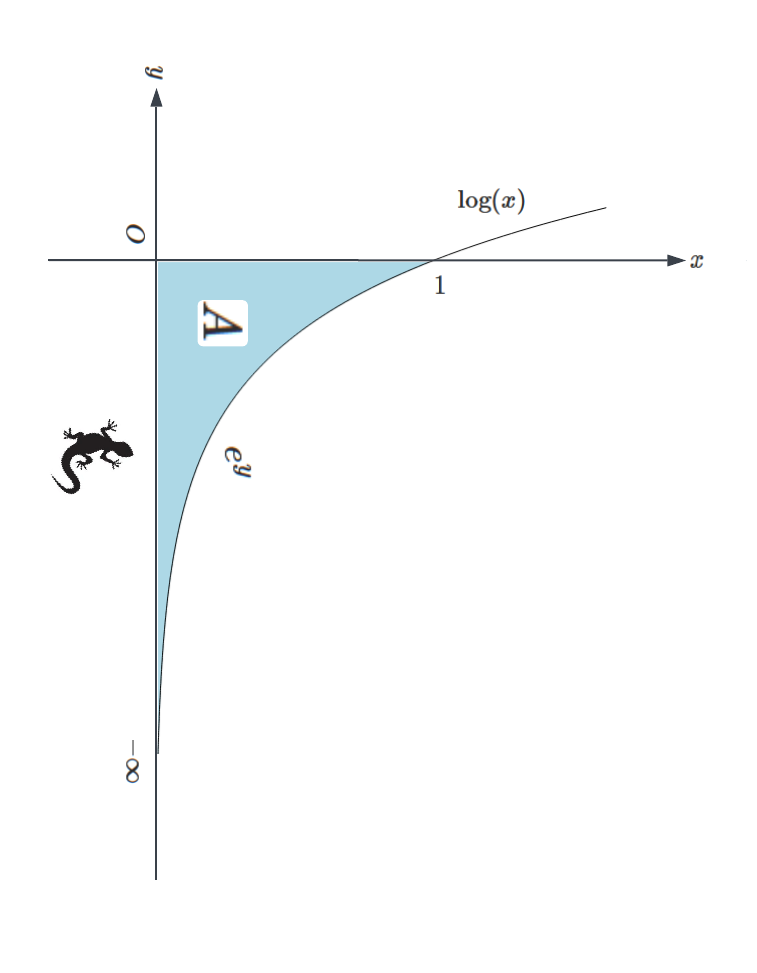

In a 1905 article, Charles-Ange Laisant, a French politician and mathematician, introduced the following theorem:

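If $f(x)$ has inverse $f^{-1}(x)$ and $F(x)$ is an antiderivative of $f(x)$, then

$$\int f^{-1}(x)\,dx = x\,f^{-1}(x) - F\big(f^{-1}(x)\big) + C$$

where $C$ is an arbitrary real constant.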

It can also be stated equivalently as:

Given a function with inverse

then

where is an arbitrary real constant.

Moreover, this theorem gives

and

Frequently, obtaining an antiderivative for $f$ is easier than finding one for $f^{-1}$. In such instances, replacing integrals of $f^{-1}$ with integrals involving $f$ can be advantageous.
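Here is an example of why trading integrals of $f^{-1}$ for integrals of $f$ helps. Take $f(x)=\tan x$ on $[0,\frac{\pi}{4}]$, so $f^{-1}(y)=\arctan y$. For continuous, strictly increasing $f$, the standard definite-integral form of Laisant's theorem is $\int_a^b f^{-1}(y)\,dy=b\,f^{-1}(b)-a\,f^{-1}(a)-\int_{f^{-1}(a)}^{f^{-1}(b)} f(x)\,dx$. Applied here, it gives $\int_0^1\arctan y\,dy=\frac{\pi}{4}-\int_0^{\pi/4}\tan x\,dx=\frac{\pi}{4}-\frac12\ln 2$. The sketch below compares both sides numerically. Its helper names are hypothetical, and Simpson's rule stands in for exact integration.

```python
import math


def simpson(f, a, b, n=2000):
    """Composite Simpson's rule on [a, b] with n (even) subintervals."""
    h = (b - a) / n
    s = f(a) + f(b)
    s += 4 * sum(f(a + i * h) for i in range(1, n, 2))
    s += 2 * sum(f(a + i * h) for i in range(2, n, 2))
    return s * h / 3


def inverse_integral_via_f(f, f_inv, a, b):
    # Definite form of Laisant's theorem for continuous, strictly increasing f:
    # ∫_a^b f^{-1}(y) dy = b f^{-1}(b) - a f^{-1}(a) - ∫_{f^{-1}(a)}^{f^{-1}(b)} f(x) dx
    return b * f_inv(b) - a * f_inv(a) - simpson(f, f_inv(a), f_inv(b))


direct = simpson(math.atan, 0.0, 1.0)                            # integrate arctan directly
via_tan = inverse_integral_via_f(math.tan, math.atan, 0.0, 1.0)  # integrate tan instead
exact = math.pi / 4 - math.log(2) / 2                            # pi/4 - ∫_0^{pi/4} tan x dx
print(direct, via_tan, exact)
```

By hand, only $\int\tan x\,dx=-\ln\cos x+C$ is needed, which is the point of the substitution.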

For example, let we have

As a result,

That is,

Another illuminating example is as follows:

Since we have

Prove

is an antiderivative of

Therefore,

Prove

By (2),

Prove

Exercise-1 Prove (2)

Exercise-2 Prove (3)

Exercise-3 What is

(Hint: )

Exercise-4 Explain and

Exercise-5 Show that (2) can be written as

And, prove

Exercise-6 Derive (1) (Hint: see The foundation of a technique for evaluating definite integrals and Integration by Parts Done Right)

Prove:

For any positive number , let

Since is a monotonically increasing function:

we have

That is,

or

By the definition of :

we also have

Combining (1) and (2) gives

This implies that

In other words,

See also The Sandwich Theorem for Functions 2.

Evaluate

This integral is known as the Dirichlet Integral, named in honor of the esteemed German mathematician Peter Dirichlet. Because the integrand has no elementary antiderivative, evaluating it with the Newton-Leibniz rule leads to an impasse. Feynman’s integral technique, however, offers a way through.
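The parametrized integral used in the steps below is not recoverable from this page. This sketch therefore assumes the common textbook choice $I(t)=\int_0^\infty e^{-tx}\frac{\sin x}{x}\,dx$ for $t>0$. Differentiating under the integral sign removes the troublesome $\frac1x$: $I'(t)=-\int_0^\infty e^{-tx}\sin x\,dx=-\frac{1}{1+t^2}$. Since $I(t)\to0$ as $t\to\infty$, we get $I(t)=\frac{\pi}{2}-\arctan t$, and so $\int_0^\infty\frac{\sin x}{x}\,dx=\lim_{t\to0^+}I(t)=\frac{\pi}{2}$. The sketch below checks both $I(t)$ and $I'(t)$ for a few values of $t$, truncating the infinite range at $x=400$. The helper names are hypothetical.

```python
import math


def simpson(f, a, b, n):
    """Composite Simpson's rule on [a, b] with n (even) subintervals."""
    h = (b - a) / n
    s = f(a) + f(b)
    s += 4 * sum(f(a + i * h) for i in range(1, n, 2))
    s += 2 * sum(f(a + i * h) for i in range(2, n, 2))
    return s * h / 3


def sinc(x):
    # sin(x)/x with its removable singularity at x = 0 filled in
    return 1.0 if x == 0.0 else math.sin(x) / x


def I(t, upper=400.0, n=40000):
    # I(t) = ∫_0^∞ e^{-tx} sin(x)/x dx for t > 0, truncated at x = upper
    return simpson(lambda x: math.exp(-t * x) * sinc(x), 0.0, upper, n)


def dI(t, upper=400.0, n=40000):
    # Differentiating under the integral sign: I'(t) = -∫_0^∞ e^{-tx} sin(x) dx,
    # which should equal -1/(1 + t^2)
    return -simpson(lambda x: math.exp(-t * x) * math.sin(x), 0.0, upper, n)


for t in (0.1, 0.5, 1.0, 2.0):
    print(t, I(t), math.pi / 2 - math.atan(t), dI(t), -1 / (1 + t * t))
```

The check stops at $t=0.1$ because the truncated integral converges slowly as $t\to0^+$. The value at $t=0$ comes from the limit, not from this numerical check.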

The evenness of the integrand implies that

Let’s consider

and define

We can differentiate with respect to

Hence, we find

Integrating with respect to from

to

gives

Since

and

,

we arrive at

By (*), it follows that

Show that

From the inequality

and

(see (3) in A Proof without Calculus),

we deduce that

That is,

By the Sandwich Theorem for Functions 2,

Consequently,

Show that

That is,

Therefore,

Exercise-1 Evaluate by the schematic method (Hint: Schematic Integration by Parts)

For given functions and

The given condition gives

and

It means

Since we have

That is,

Or,

And so,

See also Sandwich Theorems and Their Proofs.

Exercise-1 Prove

(Hint: )